We are not practicing Continuous Delivery. I know this because Jez Humble has given a very clear test. If you are not checking your code into trunk at least once every day, says Jez, you are not doing Continuous Integration, and that is a prerequisite for Continuous Delivery. I don’t do this; no one on my team does it; no one is likely to do it any time soon, except by an accident of fortuitous timing. Nevertheless, his book is one of the most useful books on software development I have read. We have used it as a playbook for improvement, and the result has been highly effective software delivery. Our experience is one small example of an interesting historical process, which I’d like to sketch in somewhat theoretical terms. Software is a psychologically intimate technology. Much as Gilbert Simondon describes for technical objects in general, building software has evolved from a distractingly abstract high modernist endeavour into something more dynamic, concrete and useful.

The term software was co-opted, in a computing context, around 1953, and had time to grow only into an awkward adolescence before being declared, fifteen years later, to be in crisis. Barely had we become aware of software’s existence before we found it to be a frightening, unmanageable thing, upsetting our expected future of faster rockets and neatly ordered suburbs. Many have noted that informational artifacts have storage and manufacturing costs approaching zero. I remember David Carrington, in one of my first university classes on computing, noting that as a consequence of this, software maintenance is fundamentally a misnomer. What we speak of as maintenance in physical artifacts, the replacement of entropy-afflicted parts with equivalents within the same design, is a nonsense in software. The closest analogue might be system administration activities like bouncing processes and archiving logfiles. What we call (or once called) maintenance is revising our understanding of the problem space.

Software has an elastic fragility to it: pliable, yet prone to the discontinuities and unsympathetic precision of logic. Lacking an intuition of digital inertia, we want to change programs about as often as we change our minds, and get frustrated when we cannot. In their book Continuous Delivery, Humble and Farley say we can change programs like that, or at least change live software many times a day, so that software development is not the bottleneck in product development.

With this approach, we see a rotation and miniaturisation of mid-twentieth century models of software development. The waterfall is turned on its side.

Consider, as an example, the 1956 Benington paper Production of large computer programs. It was revisited in 1983, giving, with today, three points of comparison. The original states four problem areas for large real time control systems (p5), summarised in my words as:

- Expensive computing time

- Reliability

- Tooling and scaffolding, here named supporting programs

- Legibility through encyclopaedic, institutional documentation

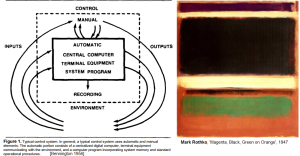

The first two of these we might think of as due to the problem domain or a simple function of the technology available. The last two are activities which take a great deal of effort but are, at least in hindsight, specific to the software methodology of the solution. The creation of extensive scaffolding and a voluminous bureaucratic literature is baked into the problem statement. The high modernist tone of this enterprise is loud and clear in the design statement on p10, where the solution is expressed as a challenge of central planning.

[T]he disadvantages of decentralization cannot be tolerated.

[…]

The control program must be centralized. This complicates design and coding since communication between component subprograms must have a high bandwidth. The use of each of the thousands of central table items must be coordinated between 100 or so component subprograms. Organized, readable specifications for the design and coding phase accomplish part of this task.

This is not to suggest Benington was wrong, exactly: a realtime control system does require a much greater degree of design coherence than loosely coordinated programs run by manual operators. The bureaucratic solution for his information problem – centralise a top down design, then document voluminously and mercilessly, so lower levels are less likely to interpret the design differently – also suited both a twentieth century military organisation and the high modernist zeitgeist. The implied design of the control system actually still seems reasonable. The technical substrate of punchcard driven mainframes is unfamiliar but also understandable as a toolset. It is the presentation of the bureaucratic waterfall process that has the pathological familiarity of the newly archaic.

Having just drawn a process/system distinction, I should note that a useful paper on applying humanistic cultural terms like “modernist” to software criticises that very distinction. In Postmodern Software Development, Robinson, Hall, Hovenden and Rachel characterise much software development as uncritically and unwittingly modernist. This is especially true of “software engineering”, a term coined in conjunction with, and in opposition to, “software crisis”. Robinson et al. identify several weaknesses of the modernist project that are dangerous in software development. Three are relevant here: 1) the assumption of a single Truth ill suits the social process of requirements gathering, where there is no definitive statement of The Problem; 2) the knowledge of experts (technologists) is privileged in ways that diminish user experience; and 3) separating systems from the people and processes that build and use them redefines the technical as that which does not fail.

Expanding on the third point, process clearly shapes a system. In a physical artefact this is trivially obvious: you can’t have a lost-wax bronze without wax. In software the process has historically been more abstracted, or obscured by individual craft variation (where there is no team process and everything devolves to individuals). One example of team process shaping system structure is the systematic use of unit tests: unit tested methods and classes tend to be shorter and more clearly decomposed. What remains familiar and reusable in Benington’s control system is its information architecture, at the level of a high-level engineering pattern like “bridge”.

The ways in which software process shapes artefact design merit discussing separately and empirically. For now, let me suggest two symptoms of waterfall. The first is the addition of visible features without the removal of others, so that the product expands with each version, like the membership of a well-established bureaucracy. Though retail packages like Microsoft Word often receive this flak, corporate change management software like BMC Remedy makes Word look like a paragon of flexible usability.

The second is the growth of extensive secondary codebases around the named system. These secondary codebases are usually scripts, in the form of scheduled jobs, auxiliary standalone shell scripts, arbitrary repair SQL stored procedures, or proprietary internal languages built around input or output manipulation. Scripting languages and their like are useful for all sorts of systems, but in these cases the actual and perceived risks of deploying the main application grow so large that any rapid or small-scope change happens as a workaround in the secondary codebase. The secondary, scripted codebase becomes a kind of shantytown around the monumental and infrequently updated core. It has a shantytown’s advantages of rapid adaptation to its builder-users, and its disadvantages in lack of infrastructural support or longer term planning. It might not even be checked into source control – it’s only a script, you see – and so one day the whole thing can be washed away in a tragic magnetic mudslide. The high modernism of the waterfall method creates core systems which are Brasilias of software, dictatorial and unchanging. In the extreme, they become “miles of jerry-built platonic nowhere”, in Robert Hughes’s words; and as with Brasilia, it is only the shantytowns grown around them that let them function as systems at all. James C. Scott and James Holston have described how the minutely planned new city nevertheless failed to provide sufficient accommodation for the sixty thousand workers needed to build it. These candangos then squatted on nearby land; by 1980, 75% of the population of Brasilia lived in unplanned settlements, while the planned city of uniform apartments and vast empty squares had less than half its projected population.

Benington, though, was too good an engineer to build a system that didn’t work. If the system had failed he also probably wouldn’t have published the paper. I still like his advice on testing:

The need for comprehensive simulated inputs and recorded outputs can be satisfied only if the basic design of the system program includes an instrumentation facility. In the same way that marginal-checking equipment has become an integral part of some large computers, test instrumentation should be considered a permanent facility in a large program.

The planning, creation and maintenance of a subsidiary testing infrastructure is not really something that can happen entirely at the end of a project, in an official “Test” phase. You have to be thinking of and designing for it all along. Another interesting insight into process is found in Benington’s 1983 review of his own paper. Before the control system in the paper was built, they built an extensive prototype with about 30% of the instruction count of the final system. In other words, they didn’t do waterfall at all, but iterated with two fairly long cycles. This wasn’t described in the 1956 paper. It didn’t suit the narrative of top down high modernist design. Since the beginning, the only way to succeed at waterfall has been to cheat.

One of the first and simplest steps recommended by Humble and Farley is checking all configuration for all environments into source control (though not into the same project). This immediately crosses two constructed, overspecialised boundaries: firstly between top-down designed code and the rest of the pieces that make a system work, and secondly between the roles of development and operations (hence the DevOps portmanteau). Strict role separation is another modernist Taylor-Fordist legacy, where workers focus only on their tiny piece of a factory production line, rather than having an awareness of the final system and overall process. In a way, though, many software release processes are not as organized, smooth or productive as Henry Ford’s Model T factory. Two years ago we had a release which required six hours of manual effort to upgrade and configure. This was merely an extreme version of the more typical half-hour to one-hour installations of related applications. The manual effort was inevitably highly repetitive and error prone. Frustration at this pain helped push us down the deployment automation path, and similar releases now take seconds to minutes. Going by the examples in Continuous Delivery, both the initial circumstances and the potential improvement are fairly typical.

In our case, previous discussion of deployment automation had foundered on the potential scope of commands a more sophisticated tool would need. However, when we sat back and analysed our manual release instructions, we found they were almost all composed of a small number of frequently repeated steps in a small number of files, based on conventions of ownership previously agreed with the support team. The first working prototype, demonstrating automated deployment of the notorious six hour release, took less than a week’s effort from one junior developer. (OK, one wunderkind junior developer, but the design was not complex, building on existing test and plant management tools. It was fruit no one realized was low hanging until we tried to reach up and pluck it.) These improvements began to mesh with other automation in a virtuous cycle. More frequent automated regression testing let us release more frequently, by dropping the one-off cost of regression, and deployment automation let us install each new release in production without the support team hunting us down with machetes.
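The shape of that first prototype can be sketched roughly, though what follows is a hypothetical illustration, not our actual tool. It assumes a release can be written down as data: an ordered list of steps drawn from a small fixed vocabulary (here `copy`, `set_property` and `restart`, all invented names), applied by a runner that stops at the first failure. The `Environment` class is an assumed in-memory stand-in for a target host, so the sketch runs without touching real servers.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Environment:
    """Hypothetical in-memory stand-in for a target host."""
    files: dict[str, str] = field(default_factory=dict)  # path -> contents
    restarted: list[str] = field(default_factory=list)   # services restarted, in order


@dataclass(frozen=True)
class Step:
    action: str            # must be a key of HANDLERS
    args: tuple[str, ...]  # positional arguments for that handler


class StepFailed(Exception):
    """Raised when a release step cannot be applied."""


def copy_file(env: Environment, src: str, dest: str) -> None:
    if src not in env.files:
        raise FileNotFoundError(src)
    env.files[dest] = env.files[src]


def set_property(env: Environment, path: str, key: str, value: str) -> None:
    # Replace (or append) a key=value line in a properties-style file.
    lines = env.files.get(path, "").splitlines()
    kept = [line for line in lines if not line.startswith(key + "=")]
    kept.append(f"{key}={value}")
    env.files[path] = "\n".join(kept) + "\n"


def restart(env: Environment, service: str) -> None:
    # A real tool would call the service manager; here we only record the request.
    env.restarted.append(service)


HANDLERS: dict[str, Callable[..., None]] = {
    "copy": copy_file,
    "set_property": set_property,
    "restart": restart,
}


def run_release(env: Environment, steps: list[Step]) -> None:
    """Apply steps in order, stopping at the first one that fails."""
    for number, step in enumerate(steps, start=1):
        handler = HANDLERS.get(step.action)
        if handler is None:
            raise StepFailed(f"step {number}: unknown action {step.action!r}")
        try:
            handler(env, *step.args)
        except Exception as exc:
            raise StepFailed(f"step {number} ({step.action}) failed: {exc}") from exc


release = [
    Step("copy", ("build/app.war", "deploy/app.war")),
    Step("set_property", ("conf/app.properties", "db.url", "jdbc:example")),
    Step("restart", ("app-server",)),
]
env = Environment(files={
    "build/app.war": "binary",
    "conf/app.properties": "db.url=old\nlog=info\n",
})
run_release(env, release)
print(env.restarted)  # ['app-server']
```

Keeping the vocabulary small is the point of this design: a new kind of manual step means one new handler, while each release stays a short list that can be reviewed and checked into source control.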

The factory production line is not my fanciful metaphor; it is the organizing principle of the whole Humble and Farley book. They prefer the term pipeline and invoke W. Edwards Deming rather than Taylor and Ford, but they still entangle themselves in that industrial and managerial tradition. The cultural change required to do continuous delivery (and its partner process, agile) can be wrenching and the benefits significant. Yet after this change, the sequence of steps suggested by Humble and Farley is not that different from the one suggested by Benington sixty years earlier.

The combination of agile methods for requirements gathering and systematic deployment automation manifests as miniaturisation and concretisation. The same process steps are followed but are made so cheap they can be run on small features and trivial changes instead of specification documents hundreds or thousands of pages long. Interfaces between phases of the cycle stop being documents handed over by humans and become running interfaces in tools like Maven or Jenkins. Once this happens it is more obvious that the process is an optimizable machine. Abstract phases on paper are replaced by specific failure conditions from the toolset. This explanation of a toolbox of specific technologies and techniques is very much the content of Humble and Farley’s book. The intent behind a particular technique is explained, but not in the abstract.
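As a rough illustration of phases becoming running interfaces, a pipeline can be modelled as an ordered list of stages, each of which either passes or reports a specific failure message, with its duration recorded so the slowest stage can be found and optimised. This is a hypothetical sketch, not how Maven or Jenkins work internally; `Stage`, `StageResult`, `run_pipeline` and the example stages are all invented for illustration.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Stage:
    name: str
    run: Callable[[str], Optional[str]]  # returns None on success, else a failure message


@dataclass(frozen=True)
class StageResult:
    name: str
    failure: Optional[str]
    seconds: float


def run_pipeline(revision: str, stages: list[Stage]) -> list[StageResult]:
    """Run stages in order against one revision, stopping at the first failure."""
    results = []
    for stage in stages:
        start = time.perf_counter()
        failure = stage.run(revision)
        results.append(StageResult(stage.name, failure, time.perf_counter() - start))
        if failure is not None:
            break  # later stages never see a revision that failed an earlier one
    return results


# Hypothetical stages: each returns a concrete failure message rather than a sign-off.
def compile_stage(revision: str) -> Optional[str]:
    return None


def unit_test_stage(revision: str) -> Optional[str]:
    if revision == "r42":
        return "OrderTest.testTotal: expected 10 but was 9"
    return None


def acceptance_stage(revision: str) -> Optional[str]:
    return None


STAGES = [
    Stage("compile", compile_stage),
    Stage("unit tests", unit_test_stage),
    Stage("acceptance", acceptance_stage),
]

for revision in ("r42", "r43"):
    results = run_pipeline(revision, STAGES)
    last = results[-1]
    verdict = "releasable" if last.failure is None else f"failed at {last.name}: {last.failure}"
    slowest = max(results, key=lambda r: r.seconds).name
    print(revision, verdict, "| slowest stage:", slowest)
```

Recording per-stage timings is what lets the pipeline be tuned like a machine: the slowest stage is the obvious first target, and a failure points at a named stage and message rather than at a phase on paper.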

In his book The Mode of Existence of Technical Objects, Gilbert Simondon introduces the term concretisation to describe the process by which the initial top level designs of early machines are transformed over time into efficient machines built of mutually interlocking components. Through a series of marvellous historical examples, including cathode ray tubes and tidal power turbines, he shows how components which are initially designed to clean up after one another attain an elegant coherence as functional interdependence is refined. In one example, the Guimbal tidal power generator became much more efficient once it stopped being a generator separately attached to a pipe of moving water, as in previous designs, and was instead inserted within the water pipe itself. The surrounding water lets it shed excess heat, and only because separate heat sinks are no longer needed can the generator fit in the pipe at all.

To design such a thing you must imagine its problems already fixed. Simondon memorably terms it the “theatre of reciprocal causality”, and we see it in software when a refactored class makes both unit testing easier and the function more coherent. He also introduces the concept of a milieu – an environment which changes to become mutually supporting for the evolved technical object. So Google’s datacentres and custom machine designs evolved together, with improvements in one – lower footprint, standard components and no cases – complementing and initiating improvements in the other – robotic management in the air conditioned dark. Likewise in software development, quick installs in QA allow more frequent tests, building confidence in coverage, making refactoring easier, allowing more features at higher quality, and so on.

It reminds me of the process of feature discovery Eric S. Raymond described in his introductions to open source software development, The Cathedral and the Bazaar and Homesteading the Noosphere. Homesteading is a rich metaphor that captures the sense of constructed possibility in a new piece of software. (Almost too rich; should we worry about violently displaced indigenous Noospherians?) Open source software offers some more rejections of high modernism: user-builders instead of elites; limited central planning; forking as a lifestyle choice. (Git is postmodern source control: everyone has their own version of the truth and they choose whether to accede to alternative consensus versions. Also consider the contemporary ubiquity of plugins for browsers or IDEs.) Humble and Farley describe something larger scale and more controlled, an industrialisation of the Noosphere. Simondon suggests:

Industrial construction of a specific technical object is possible as soon as the object in question becomes concrete, which means that it is understood in an almost identical way from the point of view of design plan and scientific outlook.

This, in other words, is what continuous delivery looks like. Perhaps it is one of a family of industrialisation techniques. For the team I work in, the software and its milieu is not this concrete or scientifically controlled. But we can now see there from here.

I once saw a senior executive expound the concept of a development organisation as a Software Factory. In this vision you would make the process of gathering requirements so rigorous, controlled and well-understood that turning them into working software in production would be a mechanical, industrial process. It is one of the stupidest things I’ve ever heard, but it’s the logical endpoint of high modernist, waterfall software engineering. To treat requirement articulation as the main problem of software is ultimately to attempt to mechanize and rationalise the client and the user, to turn a person into a computer. It is a doomed utopian project that happens when engineers are bewitched by the aesthetics of predicate logic, the way Le Corbusier and the international style architects were bewitched by the geometry of town plans viewed from the air. Meanwhile, we would push boutique, custom installations on users and operations teams because we considered each release to be a unique snowflake requiring manual handling. In a sense, we spent sixty years of software development looking the wrong way. When we turn our attention to optimising the software deployment pipeline as a machine and its milieu, then we are toolmakers again, and human.

–

References

Benington, Herbert D. – Production of Large Computer Programs

Holston, James – The Modernist City: An Anthropological Critique of Brasília

Hughes, Robert – The Shock of the New, ep. 4, “Trouble in Utopia”

Humble, Jez; Farley, David – Continuous Delivery

Raymond, Eric S. – The Cathedral and the Bazaar

Raymond, Eric S. – Homesteading the Noosphere

Robinson, Hall, Hovenden, Rachel – Postmodern Software Development

Scott, James C. – Seeing Like a State, chapter 4: “The High-Modernist City: An Experiment and a Critique”

Simondon, Gilbert – The Mode of Existence of Technical Objects, 1958; 1980 Mellamphy trans.

–

Everyone should read at least the last para of this great essay on modernism and postmodernism in sw by @conflatedauto https://t.co/5BG1Ozr4

— Jez Humble (@jezhumble) February 6, 2013

As intro agile reading at Berkeley http://blogs.ischool.berkeley.edu/i206s13/schedule/